A time series is a collection of data points gathered over time that can be used to analyse and forecast trends and patterns. Working with time series data requires caution because one wrong step can lead to misleading results. Three things to consider when working with time series data are as follows:

Spurious Correlation:

Suppose we have two time series that both depend strongly on the passage of time, for example a stock market index and a footballer's career goal tally. Because both are driven by time, their values tend to rise together over the long run, say five to six years. If you calculate the correlation between them, you will find it is strongly positive. However, this does not imply that the two variables are related in any way. The high correlation comes from the shared upward trend, not from any causal relationship: there is no link of any kind between stock market data and the goals a football player scores. Correlation alone therefore cannot tell us whether two trending series are genuinely related.
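To see the effect, here is a minimal sketch using NumPy with synthetic, hypothetical data (not real market or football figures). The two series are generated completely independently, each with its own upward trend and noise. Their raw levels come out highly correlated, while their first differences, which remove a linear trend, show almost no correlation. Differencing is one common way to check for this, not the only one.

```python
import numpy as np

rng = np.random.default_rng(seed=0)
n = 300                      # hypothetical: 300 monthly observations
t = np.arange(n)

# Two series generated independently: each is a linear upward trend plus its own noise.
stock_index = 100 + 0.5 * t + rng.normal(0, 5, n)   # hypothetical "stock index"
goals = 2 + 0.05 * t + rng.normal(0, 1, n)          # hypothetical "career goal tally"

# Correlation of the raw levels is driven almost entirely by the shared time trend.
r_levels = np.corrcoef(stock_index, goals)[0, 1]

# First differences turn each linear trend into a constant, leaving the independent noise.
r_diff = np.corrcoef(np.diff(stock_index), np.diff(goals))[0, 1]

print(f"correlation of levels:      {r_levels:.2f}")
print(f"correlation of differences: {r_diff:.2f}")
```

The exact numbers depend on the random seed, but the gap between the two correlations is the point: the correlation of the levels reflects the common trend, not a relationship between the variables.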

Series or Order of Data:

The word "series" in time series is there for a specific reason: when working with time series, the order of the data is crucial. We cannot randomly shuffle the observations or pick out an arbitrary fragment of the data to perform our analysis on. We must be extra cautious when creating train/test splits or performing cross-validation, because the standard versions of these procedures often shuffle the rows. Shuffling destroys the temporal order and lets the model learn from observations that come after the ones it is tested on. Instead, every split must respect time: train on earlier observations and test on later ones.
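A minimal sketch of a time-respecting split in plain Python, assuming an expanding-window scheme: each fold trains on all observations up to a cut-off and tests on the block immediately after it, so no fold ever tests on data that precedes its training set. The function name `expanding_window_splits` is hypothetical; scikit-learn's `TimeSeriesSplit` follows a similar idea if you prefer a library version.

```python
def expanding_window_splits(n_samples, n_splits):
    """Yield (train_indices, test_indices) pairs that never train on the future.

    Each test block has n_samples // (n_splits + 1) observations; any leftover
    observations go into the first training window so no data is dropped.
    """
    test_size = n_samples // (n_splits + 1)
    first_train_end = n_samples - n_splits * test_size
    for k in range(n_splits):
        train_end = first_train_end + k * test_size
        train_idx = list(range(0, train_end))                     # everything before the cut-off
        test_idx = list(range(train_end, train_end + test_size))  # the block right after it
        yield train_idx, test_idx


# Usage: 12 ordered observations, 3 folds.
for train_idx, test_idx in expanding_window_splits(12, 3):
    print(f"train {train_idx[0]}-{train_idx[-1]}, test {test_idx[0]}-{test_idx[-1]}")
# train 0-2, test 3-5
# train 0-5, test 6-8
# train 0-8, test 9-11
```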

Duration of Data:

Consider a scenario in which you want to perform some sort of time series forecasting. You might be tempted to do this with only a year or so of data. Keep in mind, however, that you will typically need at least three to four years of data for any reasonable time series forecast, because only when the yearly cycle has repeated several times can you observe seasonality and tell it apart from random noise.

Let's understand what the term seasonality means. Seasonality in a time series is the presence of patterns that repeat over a fixed period, such as a week or a year. These patterns are often driven by regular occurrences such as the seasons or holidays. Seasonality can be seen in many kinds of time series data, including product sales, website traffic, and weather. It can have a substantial impact on how data is interpreted and on the accuracy of forecasts.

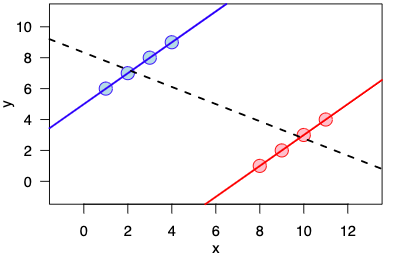
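The sketch below illustrates why several years of data help. It builds four years of hypothetical monthly sales with a trend, a yearly sine-shaped pattern, and noise, removes the trend with a straight-line fit (`np.polyfit`), and averages the detrended values for each calendar month across the years. The sine-shaped pattern and all numbers are assumptions chosen for illustration; real data would usually call for a dedicated seasonal decomposition method.

```python
import numpy as np

rng = np.random.default_rng(seed=1)
years = 4
months = np.arange(12 * years)  # 48 monthly observations (hypothetical)

# Assumed seasonal pattern: repeats exactly every 12 months.
true_season = 10 * np.sin(2 * np.pi * (months % 12) / 12)
sales = 200 + 1.5 * months + true_season + rng.normal(0, 2, months.size)

# Remove the long-run trend with a straight-line fit (polyfit returns [slope, intercept]).
slope, intercept = np.polyfit(months, sales, deg=1)
detrended = sales - (slope * months + intercept)

# Rows = years, columns = calendar month; average each month across all years.
seasonal_profile = detrended.reshape(years, 12).mean(axis=0)

print(np.round(seasonal_profile, 1))
```

With only one year of data, each calendar month would contribute a single value, so the estimated profile would be that one year's pattern plus that year's noise. Averaging over several years is what makes the repeating pattern stand out.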

We have to be careful not only when using data from a short period but also when using data covering seven or eight years. The reason for extra caution over a longer period is that structural breaks become more likely in the long run. A structural break in a time series is a significant change in the process that generates the data, such as a sudden shift in level or trend, or a change in the correlation between variables. These breaks can significantly affect time series analysis and forecasting, because a model fitted across a break mixes two different regimes.
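As a rough illustration, the sketch below searches for a single break in the mean of a series by trying every candidate split point and keeping the one that minimises the squared error of fitting a separate mean to each side. The function `best_single_break`, its `min_size` parameter, and the simulated eight years of monthly data are all hypothetical. This only detects a level shift; formal procedures such as the Chow test or the Bai-Perron method also handle changes in trend and test whether a break is statistically significant.

```python
import numpy as np

def best_single_break(y, min_size=12):
    """Return the index that best splits y into two segments with different means.

    Every split point that leaves at least min_size observations on each side is
    tried; the one minimising the total squared error around each segment's mean wins.
    """
    y = np.asarray(y, dtype=float)
    best_idx, best_sse = None, np.inf
    for k in range(min_size, len(y) - min_size + 1):
        left, right = y[:k], y[k:]
        sse = ((left - left.mean()) ** 2).sum() + ((right - right.mean()) ** 2).sum()
        if sse < best_sse:
            best_idx, best_sse = k, sse
    return best_idx


rng = np.random.default_rng(seed=2)
# Hypothetical 8 years of monthly data whose level jumps from about 50 to about 70 at month 60.
y = np.concatenate([rng.normal(50, 3, 60), rng.normal(70, 3, 36)])
print(best_single_break(y))
```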

Keeping these three points in mind will make your time series analysis and forecasting more reliable.